AI-powered rendering (2026): tools, limitations, and why working with a professional matters

AI-powered rendering in 2026: tools, limitations, and differences from traditional CGI. Discover when AI works, and why a professional still matters.

In recent years, AI-powered rendering has evolved from a technological curiosity into a practical tool in 3D visualization, design, and marketing. By 2026, AI can generate photorealistic images, visual concepts, and product variations in very short timeframes, reducing costs and significantly lowering the barriers to visual content production.

However, despite the many advantages AI brings to image creation and 3D rendering, it is essential to understand when these tools represent a real opportunity and when they reveal structural limitations in consistency, control, technical accuracy, and long-term reliability. It is in this balance between speed and quality that the professional, or the specialized studio, becomes essential: someone capable of turning AI from a simple image generator into a strategic tool within a solid, scalable creative process.

What is meant by AI-powered rendering

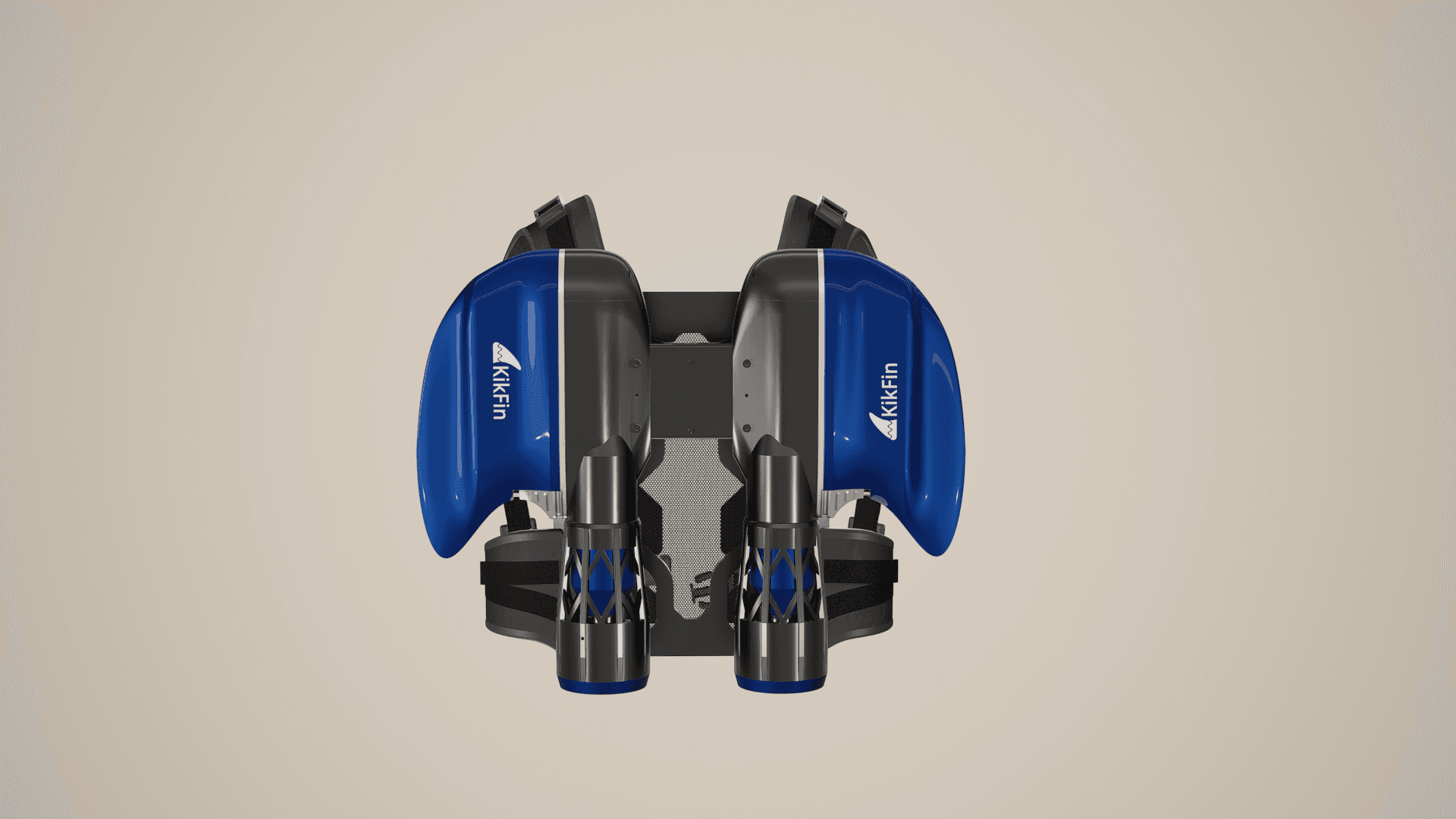

AI rendering refers to images generated or assisted by artificial intelligence algorithms capable of interpreting textual or visual prompts and transforming them into realistic representations of products, environments, or scenes. In many cases, these systems can simulate materials, lighting, and visual contexts without relying on a fully traditional 3D modeling process, drastically reducing production time and costs.

The key difference compared to traditional rendering lies in how the image is constructed. Classic CGI is based on real geometry, physically accurate materials (PBR shaders), and rendering engines that simulate light behavior in a mathematically verifiable way, using software such as Blender, Cinema 4D, 3ds Max, Maya, and Houdini, together with render engines like V-Ray or Redshift.
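To give a sense of what "mathematically verifiable" means in practice: physically based render engines are, broadly speaking, numerical solvers for the rendering equation, introduced by James Kajiya in 1986. It states that the light leaving a surface point equals the light the point emits plus all incoming light reflected by its material. The notation below is the standard textbook form, not a formula specific to any one engine:

```latex
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o)
  + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\,
    L_i(\mathbf{x}, \omega_i)\,(\omega_i \cdot \mathbf{n})\,\mathrm{d}\omega_i
```

Here $L_o$ is the outgoing radiance toward direction $\omega_o$, $L_e$ the emitted radiance, $f_r$ the material's reflectance function (BRDF, which is what a PBR shader describes), $L_i$ the incoming radiance from direction $\omega_i$, $\mathbf{n}$ the surface normal, and $\Omega$ the hemisphere of directions around it. Because every term has a physical meaning, a render built this way can be checked, adjusted, and reproduced.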

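Generative AI, by contrast, typically produces an image by predicting plausible pixels from patterns learned during training, without an explicit geometric model or a physical simulation of light. This makes the process extremely fast and flexible, ideal for exploratory phases, concept development, and visual testing. However, it also introduces structural limitations that become evident when moving from experimental use to professional, technical, or production contexts, where consistency, control, and reliability are critical.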

AI rendering tools: an overview

By 2026, a wide range of tools are available for experimenting with AI-based rendering. Platforms such as Midjourney, DALL·E, and Stable Diffusion are widely used for creating visual concepts, moodboards, and inspirational imagery. Adobe Firefly, integrated into the Creative Cloud ecosystem, supports the generation and modification of textures, materials, and basic scenes, streamlining early design phases.

There are also tools such as Kaedim, Luma AI, and Spline AI, which aim to bridge the gap between 2D and 3D by transforming images or prompts into simplified 3D models. In parallel, AI-powered features are increasingly embedded within professional 3D software, including intelligent denoising, assisted material and environment generation, and render-time optimization.

All of these tools are extremely valuable for idea exploration and for accelerating certain creative stages. However, they are not designed to replace a complete professional production pipeline.

The limitations of AI-powered rendering

Despite significant progress, AI-powered rendering still presents relevant limitations, especially when used in professional, industrial, or brand-driven contexts.

1. Lack of technical and design control

One of the main limitations of AI rendering is low technical reliability. Generated images do not guarantee real-world proportions, structural coherence, or compliance with engineering specifications. A product may look correct at first glance, yet be unfeasible or inconsistent from a design, manufacturing, or functional standpoint.

This makes AI unsuitable for scenarios where precision, verifiability, and full control over the output are required.

2. Poor consistency across images and multiple views

Generating multiple images of the same object while maintaining perfectly consistent geometry, materials, details, and proportions is still extremely challenging. Even with precise prompts, small variations can alter shapes, surfaces, or key components. This is a concrete limitation for product catalogs, e-commerce, advertising campaigns, and technical presentations, where visual consistency is non-negotiable.

3. Lack of a structured production pipeline

Generative AI systems produce final images, not reusable assets. In most cases:

- there are no clean 3D models

- animations or product variants cannot be controlled

- no scalable foundation is created over time

Each image tends to be an isolated output, difficult to reuse or integrate into complex workflows or long-term communication strategies.

4. Limited scalability and integration into business processes

Because structured, reusable assets are missing, AI rendering struggles to integrate into:

- ongoing marketing pipelines

- training systems

- product configurators

- larger digital ecosystems

This significantly limits content scalability and reduces its long-term value.

5. Branding, copyright, and visual consistency risks

Another critical aspect concerns branding, copyright, and the protection of visual identity. Many artificial intelligence tools rely on datasets that are not fully transparent, increasing the risk of unintentional similarities with other brands, inconsistencies with a company’s established visual language, and issues related to originality and intellectual property ownership.

A recent case that clearly illustrates this dynamic is the viral #Rhodegirl trend: AI-generated images that closely replicate the aesthetic of an iconic brand like Rhode, despite having no official connection to it. In just a few clicks, an algorithm took an identity built over time through art direction, narrative coherence, and strategic choices, and made it replicable and open to appropriation. The result is a gray area in which it becomes difficult to distinguish authentic brand communication from simulation, with direct implications for image control, positioning, and public trust.

For established brands, this is not merely a legal issue but a reputational and strategic risk. When visual production is delegated to unguided generative models, the danger lies in the loss of uniqueness, recognizability, and consistency. Artificial intelligence can imitate a style, but it cannot safeguard a brand identity.

For a more technical legal analysis, we recommend reading this article.

AI rendering vs traditional CGI: a difference in approach

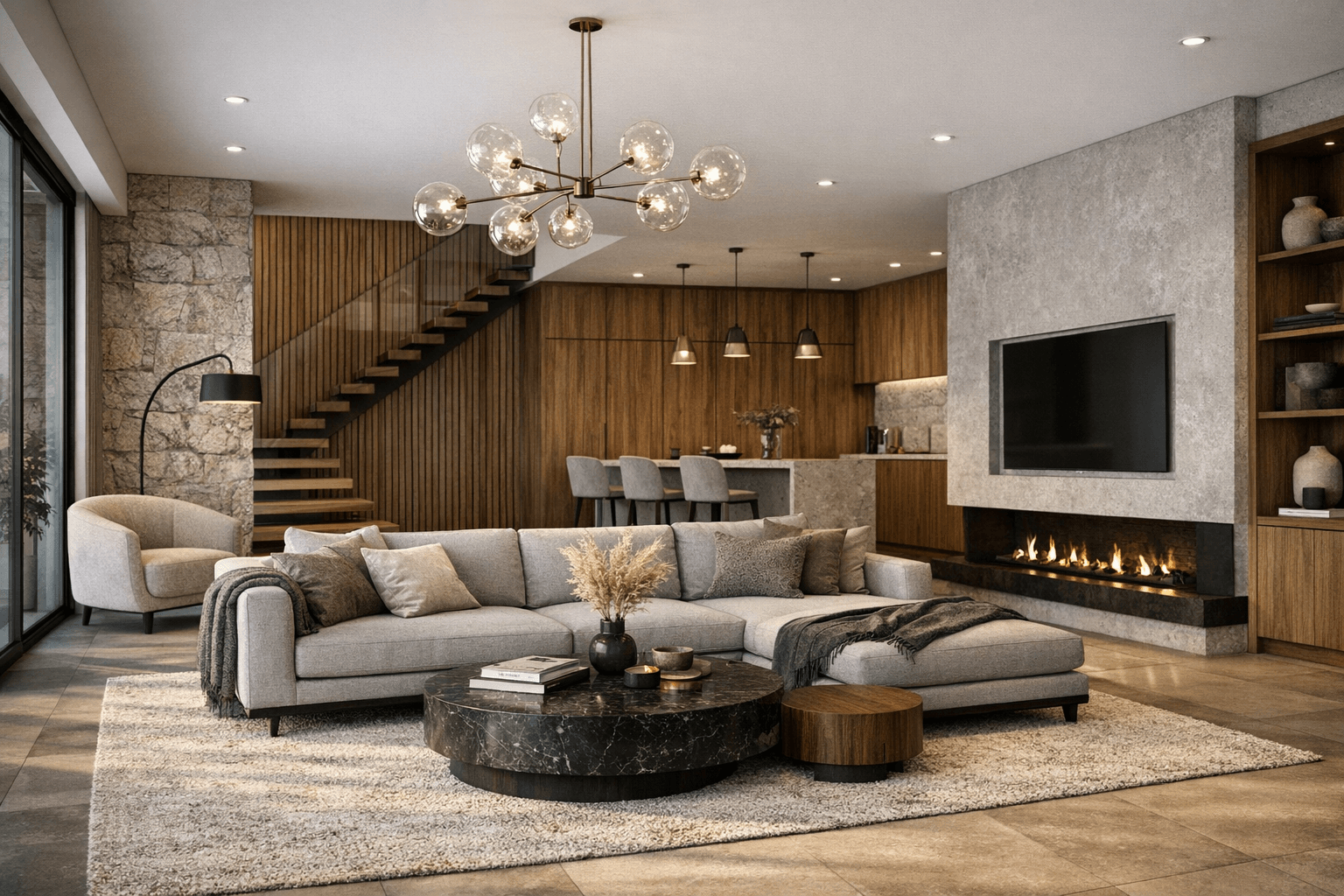

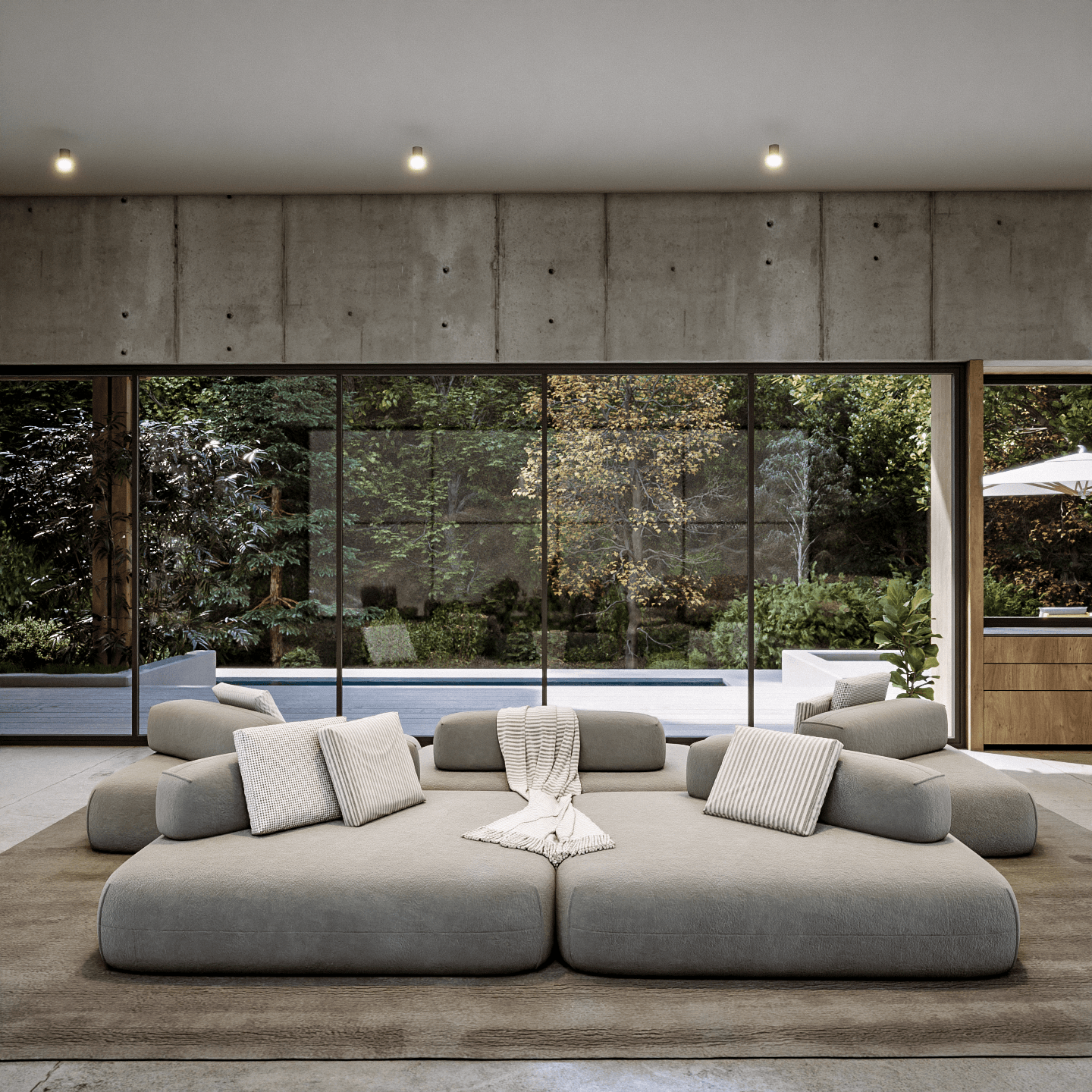

The comparison between AI rendering and traditional CGI rendering is not about "better or worse," but about approach. AI rendering offers speed, immediacy, and creative freedom, making it ideal for concept development and early project phases.

Traditional CGI, on the other hand, provides control, precision, and repeatability. By relying on real 3D models and physically accurate materials, it enables the creation of coherent, scalable, and reusable visuals for animations, configurators, advertising, and immersive applications.

In short, AI is a powerful tool when used at the right stage of the process, but CGI remains the most reliable choice when a project requires consistency, long-term usability, and real integration with the product or brand.

Why rely on a professional studio for AI-powered rendering

This is where the role of a studio like Ophir becomes essential. Using artificial intelligence does not mean replacing CGI—it means integrating it intelligently within a professional production pipeline, where each tool has a precise role.

At Ophir, CGI and AI work in synergy: CGI ensures technical accuracy, structural coherence, and full control, while AI is selectively used to accelerate and enhance specific creative phases, such as concept exploration, visual variations, mood definition, and stylistic testing. AI becomes a supporting tool, not a shortcut. The core of the work remains a solid, verifiable, and structured pipeline, capable of producing reliable assets over time.

Most importantly, a professional studio takes responsibility for the result. A tool can generate an image in seconds—but it cannot guarantee visual consistency, technical credibility, brand protection, or long-term strategic value. It is precisely in this balance between technology, expertise, and vision that the CGI–AI synergy reaches its full potential.

Conclusion: is AI rendering a tool or a shortcut?

In 2026, AI rendering is a powerful tool but not a universal solution. It works extremely well for rapid concepts, visual exploration, and early-stage ideation. It becomes risky when a brand is involved, a product must be real and manufacturable, or communication needs to be consistent and repeatable.

In these cases, what matters is not the tool but who uses it, and this is exactly where a professional partner makes the difference. If you are considering AI for your renders and want to use it strategically, safely, and with clear business objectives, Ophir Studio can help you build a robust, scalable pipeline aligned with your brand and goals.

Get in touch to understand when AI is the right choice and when the expertise of a professional partner is essential.
